Least squares regressions

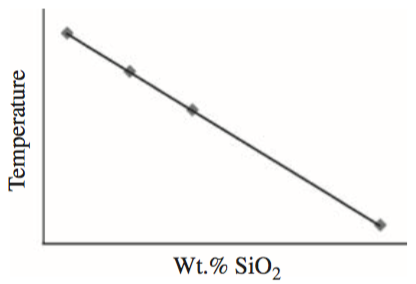

Least squares regressions are a common way of determining whether two variables are linearly related to one another. In other words, this is a method to determine whether a line is a good “fit” to some measured values. Not all data should be expected to be fit well by a line, but linear regressions are a powerful method for identifying cases where two variables are linearly related. A common example is the eruption temperature of a magma versus its SiO\(_2\) content, as shown in Figure 2.

Figure 2. Eruption temperatures of magmas as a function of their SiO\(_2\) content with a linear regression line. Source: Figure 16.1 from McKillup and Dyar, 2010.

The general idea behind calculating a linear regression is that we want to find the equation of the line that best fits some x-y data, such as temperature and SiO\(_2\) content in the example above. To do this, we first need to recall the equation for a line:

\[y = A + Bx\]

where \(x\) and \(y\) are the coordinates of the data points, \(A\) is the \(y\)-intercept, and \(B\) is the slope of the line.

Thus, in order to calculate a “best-fit” line to some data, we need to determine the values of the constants \(A\) and \(B\). First, consider the case in which \(A\) and \(B\) are already known. If we make the rather common assumption that the uncertainties in the \(x\) values are negligible compared to the uncertainties in the \(y\) values, then the value of \(y_i\) expected for each measured \(x_i\) is:

\[y_i = A + Bx_i\]

Thus, when \(A\) and \(B\) are known, we can calculate the expected value of \(y\) for any value of \(x\).
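
As a minimal sketch in Python, evaluating \(y = A + Bx\) for several \(x\) values could look like the code below. The helper name `predict_y` and the values \(A = 1\) and \(B = 2\) are hypothetical, chosen only for illustration.

```python
def predict_y(x_values, intercept, slope):
    """Evaluate the line y = A + Bx, with A = intercept and B = slope."""
    return [intercept + slope * x for x in x_values]


# Hypothetical line with A = 1.0 (y-intercept) and B = 2.0 (slope)
print(predict_y([0.0, 1.0, 2.5], intercept=1.0, slope=2.0))  # [1.0, 3.0, 6.0]
```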

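Finding the values of \(A\) and \(B\) for a linear regression to some x-y data is fairly straightforward, though it does involve a bit of algebra. The least squares approach chooses the \(A\) and \(B\) that minimize the sum of the squared vertical distances between the measured values and the line, \(\sum (y_i - A - Bx_i)^2\), which is where the method gets its name. For our purposes, I’ll refer you to Chapter 8 of Taylor, 1997 for a complete derivation of how to find \(A\) and \(B\), and simply present the relevant equations below. The value of the \(y\)-intercept can be found using:

\[A = \frac{\sum x_i^2 \sum y_i - \sum x_i \sum x_i y_i}{\Delta}\]

where \(x_i\) is the \(i\)th data point plotted on the \(x\)-axis, \(y_i\) is the \(i\)th data point plotted on the \(y\)-axis, the sums run over all data points, and \(\Delta\) is defined below.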
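
A minimal Python sketch of the intercept equation is shown below. The function name `intercept` is hypothetical, and the test points are made up so that they lie exactly on \(y = 1 + 2x\), making the expected answer \(A = 1\). So that the function stands alone, \(\Delta\) is computed inline as \(N\sum x_i^2 - \left(\sum x_i\right)^2\).

```python
def intercept(x, y):
    """Unweighted least-squares intercept:
    A = (sum(x^2) * sum(y) - sum(x) * sum(x*y)) / Delta
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)  # N, the number of data points
    sum_x = sum(x)
    sum_y = sum(y)
    sum_x2 = sum(xi * xi for xi in x)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    delta = n * sum_x2 - sum_x ** 2  # Delta = N*sum(x^2) - (sum(x))^2
    return (sum_x2 * sum_y - sum_x * sum_xy) / delta


# Hypothetical points lying exactly on y = 1 + 2x
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]
print(intercept(x, y))  # 1.0
```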

The line slope can be found using:

\[B = \frac{N \sum x_i y_i - \sum x_i \sum y_i}{\Delta}\]

where \(N\) is the number of values in the regression.
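
Similarly, the slope equation can be sketched as below. The function name `slope` is hypothetical, and the four data points are made up so that they scatter around a line rather than falling exactly on it. As in the intercept sketch, \(\Delta\) is computed inline.

```python
def slope(x, y):
    """Unweighted least-squares slope:
    B = (N * sum(x*y) - sum(x) * sum(y)) / Delta
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)  # N, the number of values in the regression
    sum_x = sum(x)
    sum_y = sum(y)
    sum_x2 = sum(xi * xi for xi in x)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    delta = n * sum_x2 - sum_x ** 2  # Delta = N*sum(x^2) - (sum(x))^2
    return (n * sum_xy - sum_x * sum_y) / delta


# Hypothetical data scattered around a line
x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 3.0, 5.0, 6.0]
print(slope(x, y))  # 1.4
```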

And the value of \(\Delta\) is:

\[\Delta = N \sum x_i^2 - \left(\sum x_i\right)^2\]

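Because \(\Delta = N\sum x_i^2 - \left(\sum x_i\right)^2\) can be rewritten as \(N\sum (x_i - \bar{x})^2\), where \(\bar{x}\) is the mean of the \(x_i\), it is zero only when every \(x_i\) is identical. In that case the data would lie along a vertical line, and both \(A\) and \(B\) are undefined (division by zero). The short sketch below, using the hypothetical function names `delta` and `delta_centered`, illustrates both forms and the degenerate case.

```python
def delta(x):
    """Delta = N * sum(x_i^2) - (sum(x_i))^2 for an unweighted fit."""
    n = len(x)
    return n * sum(xi * xi for xi in x) - sum(x) ** 2


def delta_centered(x):
    """Equivalent form of Delta: N * sum((x_i - mean)^2)."""
    n = len(x)
    mean = sum(x) / n
    return n * sum((xi - mean) ** 2 for xi in x)


print(delta([0.0, 1.0, 2.0, 3.0]))           # 20.0
print(delta_centered([0.0, 1.0, 2.0, 3.0]))  # 20.0
print(delta([2.0, 2.0, 2.0]))                # 0.0, all x equal: A and B undefined
```
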
With the equations above, you can now calculate unweighted regression lines: best-fit lines to some x-y data in which the measurement uncertainties do not influence the fit of the line. It is also possible to fit regression lines that account for differing uncertainties in the individual data points, referred to as weighted regressions, but we will not consider that type of regression for the time being.
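
Putting the three equations together, the sketch below is one way to write an unweighted fit in plain Python with no external libraries. The names `fit_line` and `sum_sq_residuals` are hypothetical, and the data are made up. The final loop checks the least squares property numerically: nudging \(A\) or \(B\) away from the fitted values should only increase \(\sum (y_i - A - Bx_i)^2\).

```python
def fit_line(x, y):
    """Unweighted least-squares fit of y = A + Bx. Returns (A, B)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    sum_x = sum(x)
    sum_y = sum(y)
    sum_x2 = sum(xi * xi for xi in x)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    delta = n * sum_x2 - sum_x ** 2
    if delta == 0:
        # Happens when all x values are identical (or there are no points)
        raise ValueError("Delta is zero; the line cannot be fit")
    a = (sum_x2 * sum_y - sum_x * sum_xy) / delta  # y-intercept A
    b = (n * sum_xy - sum_x * sum_y) / delta       # slope B
    return a, b


def sum_sq_residuals(x, y, a, b):
    """Sum of squared vertical distances between the data and y = a + bx."""
    return sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))


# Hypothetical data
x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 3.0, 5.0, 6.0]
a, b = fit_line(x, y)
print(a, b)  # 0.5 1.4

# Any small change to A or B should make the fit worse
best = sum_sq_residuals(x, y, a, b)
for da, db in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.05), (0.0, -0.05)]:
    assert sum_sq_residuals(x, y, a + da, b + db) > best
```

Raising an error when \(\Delta = 0\) is a design choice in this sketch; other code might return `None` instead.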